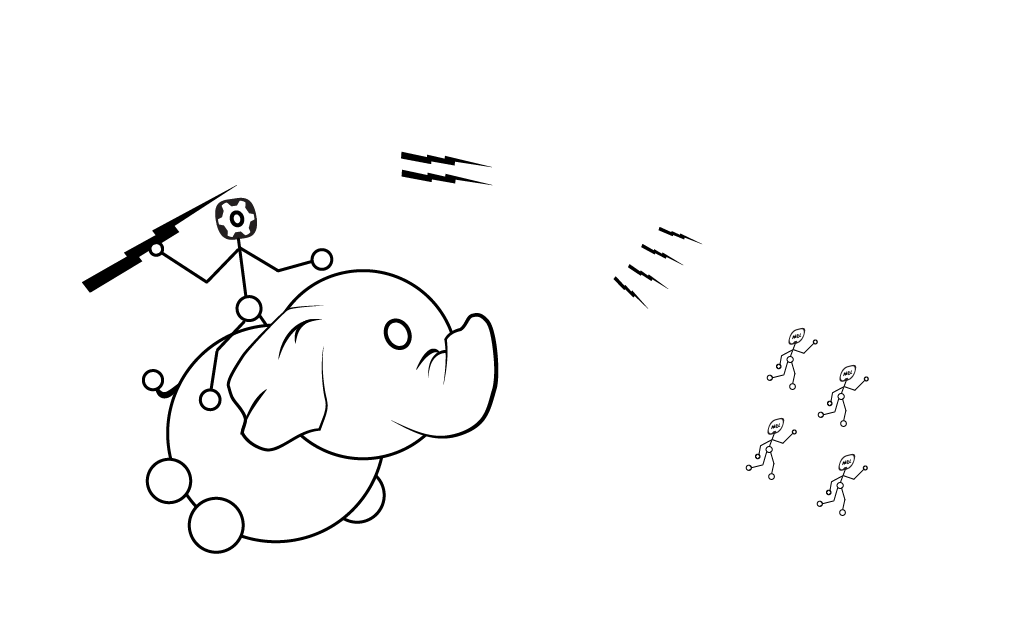

This Apache project deserves its own mention here, thanks to its widespread use and its sheer effectiveness. In layman's terms: you tell Spark what you want to do to some data, and it creates a digital sweatshop. It splits the data into chunks, hands those chunks to individual workers that all do their jobs in parallel, then reassembles the processed chunks into a finished product. Not very sexy (and installing and administering it in its native Linux environment is the complete opposite of sexy), but it makes dealing with HUGE datasets possible and in some cases even trivial.
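To make the split, process, reassemble idea concrete, here is a minimal sketch in plain Python. To be clear, this is not Spark and not how Spark is implemented: it is only an analogy using the standard library, with threads on one machine standing in for Spark's workers spread across a cluster. The function names (`split_into_chunks`, `process_chunk`, `parallel_square`) are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split a list into roughly equal contiguous chunks."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_chunk(chunk):
    """The 'job' each worker does. Here: square every number (hypothetical task)."""
    return [x * x for x in chunk]


def parallel_square(data, n_workers=4):
    """Chunk the data, process the chunks concurrently, then reassemble."""
    chunks = split_into_chunks(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # pool.map returns results in the same order as the input chunks
        processed = list(pool.map(process_chunk, chunks))
    # Flatten the processed chunks back into one finished product
    return [x for chunk in processed for x in chunk]


if __name__ == "__main__":
    print(parallel_square(list(range(10))))
```

The part that matters is the shape: the caller only says what to do to the data (`process_chunk`), and the chunking, scheduling, and reassembly happen behind the scenes. Spark does the same thing at a much larger scale, across many machines and with fault tolerance on top.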

Spark often sits on top of an HDFS (Hadoop Distributed File System) cluster, though it can also read from cloud object storage. Conveniently, HDFS-style storage is the same kind of technology underpinning many data lakes (you may be able to put 2 and 2 together there). The big cloud providers have finally gotten around to replacing some old-school data migration processes with native Spark, and it's making a huge difference in the move-relational-tables-around world.

It's hard to mention Spark without also mentioning Databricks (the company started by the people who created Spark). They are the primary contributors to the Spark project, and their paid service abstracts away a lot of the bullshit involved in provisioning, administering, and configuring Spark (seriously, it is zero fun).

So if you need to process something in parallel, or you have massive quantities of data, now you know what digital tree to bark up.