Artificial Intelligence and Machine Learning are the hottest buzzwords around, and have been for years. I've heard C-suiters make some of the most bizarre and asinine comments about these technologies on earnings calls, and those talking heads obviously don't understand them. But my God, man, it's 2018. It's time that even the golden-passport-wielding, 'I came from MBB' hurr durr leadership crowd started to understand at least the rudimentary aspects of these things that are creeping into every part of our daily lives.

Color me jaded, but petroleum engineers have been implementing iterative learning algorithms since 1949.

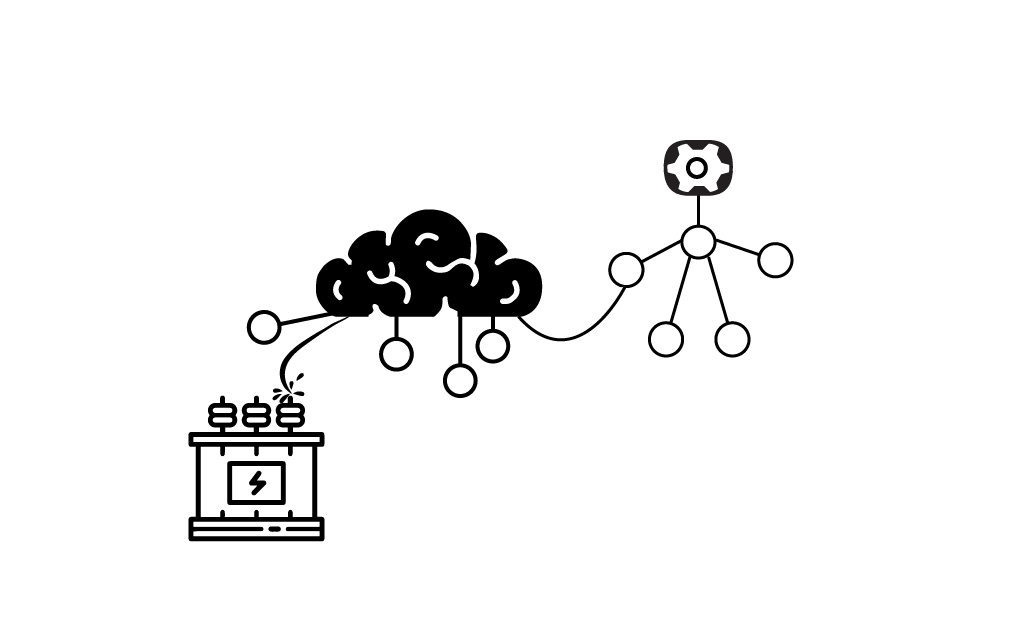

Here's the thing about AI: it basically comes in three forms: machine learning, deep learning, and neural networks. Technically speaking, neural networks don't belong at this level of the hierarchy, since they're a subset of machine learning. However, the way they work sets them apart from the other methods. They're scary accurate, but they tell you absolutely fuck all about how or why they arrived at their answer. That makes them not so great for industry, where you need some insight into why a machine predicted what it did, or at the very least, how. Deep learning is just neural networks with many layers stacked on top of each other, so it has about as much use for non-academic pursuits as its constituent components.

Which leaves us with plain ole vanilla (if fancy) machine learning methods. Within those, we have two categories: supervised (the sexy kind) and unsupervised (the weird academic kind that gets very hard to explain). Drilling down into supervised learning, we have classification (yes or no, good or bad, buy or don't buy) and regression (predicting a number on a continuous range instead of picking between discrete or binary options). The big thing is that supervised learning dominates the field of ML, and most of the work in that area is done on classification (naïve Bayes classifiers in particular), which is of very limited value for industrial applications.
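To make "classification" a bit more concrete, here's a minimal sketch of a Gaussian naïve Bayes classifier in plain Python. The function names and the toy "good"/"bad" data are made up for illustration, and the sketch assumes each feature is normally distributed within each class. The "naïve" part is the assumption that features are independent once you know the class, which is why the per-feature log-likelihoods can simply be added together.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y):
    """Hypothetical helper: estimate each class's prior plus per-feature mean and variance."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        cols = list(zip(*rows))
        means = [sum(c) / len(c) for c in cols]
        # A tiny epsilon keeps constant features from causing division by zero.
        variances = [sum((v - m) ** 2 for v in c) / len(c) + 1e-9 for c, m in zip(cols, means)]
        model[label] = (len(rows) / len(y), means, variances)
    return model

def predict_gaussian_nb(model, row):
    """Hypothetical helper: return the class with the highest log-posterior."""
    best_label, best_score = None, -math.inf
    for label, (prior, means, variances) in model.items():
        score = math.log(prior)
        # Naive independence assumption: sum per-feature Gaussian log-likelihoods.
        for x, m, v in zip(row, means, variances):
            score += -0.5 * math.log(2 * math.pi * v) - (x - m) ** 2 / (2 * v)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Made-up toy data: two features, two classes.
X = [[1.0, 2.0], [1.2, 1.8], [0.9, 2.2], [5.0, 6.0], [5.2, 5.8], [4.8, 6.1]]
y = ["bad", "bad", "bad", "good", "good", "good"]
model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [1.1, 2.0]))  # → bad
```

The output is a label, not a number, which is exactly the yes/no, good/bad flavor described above. A regression model would instead return a value on a continuous range.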

When we talk or hear about ML, especially in industry, the vast majority of the time we're talking about one model: the random forest. In and of itself, a random forest is just an ensemble of decision (or regression) trees. Each tree is a stack of split decisions: go left or go right, and in a regression tree each side ends in a prediction like .7 or 1.56. Those decisions turn out 'right' or 'wrong', and the machine learns. It tries split after split, fucking up again and again, but very quickly, and keeps whichever splits are least wrong. Then it reaches a point where it doesn't get any more correct.
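Here's a stripped-down sketch of that idea, again in plain Python with hypothetical function names and made-up data. To keep it short, each "tree" is a single-split regression stump rather than a full-depth tree, which is a simplification of what real random forests do. Each stump is trained on a bootstrap resample of the rows and a random subset of features (for a one-split stump, picking features per tree is the same as picking them per split). It then tries every candidate threshold and keeps the one with the smallest squared error. The forest's prediction is the average of its stumps. A classification forest would take a majority vote instead.

```python
import random

def fit_stump(X, y, feature_indices):
    """Hypothetical helper: one-split regression tree choosing the lowest squared-error split."""
    best = None
    for j in feature_indices:
        values = sorted(set(row[j] for row in X))
        # Candidate thresholds are midpoints between consecutive distinct values,
        # so both sides of every split are non-empty.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            err = sum((v - left_mean) ** 2 for v in left) + sum((v - right_mean) ** 2 for v in right)
            if best is None or err < best[0]:
                best = (err, j, t, left_mean, right_mean)
    if best is None:
        # No usable split (every sampled feature is constant): always predict the mean.
        mean = sum(y) / len(y)
        return (feature_indices[0], 0.0, mean, mean)
    _, j, t, left_mean, right_mean = best
    return (j, t, left_mean, right_mean)

def predict_stump(stump, row):
    j, t, left_mean, right_mean = stump
    return left_mean if row[j] <= t else right_mean

def fit_forest(X, y, n_trees=50, max_features=1, seed=0):
    """Hypothetical helper: bag many stumps, each on a bootstrap sample and random features."""
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        # Bootstrap: draw n rows with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        Xb = [X[i] for i in idx]
        yb = [y[i] for i in idx]
        # Random subset of feature columns for this stump.
        feats = rng.sample(range(p), min(max_features, p))
        forest.append(fit_stump(Xb, yb, feats))
    return forest

def predict_forest(forest, row):
    # Average the individual stump predictions.
    return sum(predict_stump(s, row) for s in forest) / len(forest)

# Made-up data: a step from 1.0 to 5.0 somewhere between x = 1.2 and x = 1.6.
X = [[0.5], [0.7], [1.0], [1.2], [1.6], [1.8], [2.0], [2.2]]
y = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]
forest = fit_forest(X, y)
print(predict_forest(forest, [0.6]), predict_forest(forest, [2.1]))
```

On this toy step, predictions near x = 0.6 should land close to 1 and predictions near x = 2.1 close to 5. Adding more trees smooths the average, but past a certain count the predictions stop changing much, which is the plateau described above.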

That's it.

That's machine learning.

How hard is that?